I have started experimenting with a blocky voxel renderer à la Minecraft. This post describes and compares algorithms to go from a voxel representation to a triangle mesh. I take some terminology from this great blog article where the author introduces three meshing algorithms: stupid, naive and greedy. The “stupid” algorithm generates six faces for every filled voxel. The “naive” meshing algorithm culls faces that cannot be seen because they are between two filled voxels. The “greedy” meshing algorithm merges faces to further reduce their number.

The same author has also written another blog post that compares different voxel representations. I agree with this blog post’s claim that for a voxel representation iteration is more important than random access. But I disagree that octrees are bad for iteration. Iterating through an octree touches every node exactly once and is therefore linear in the number of leafs.

We will compare the “stupid” and “naive” meshing algorithms on a static grid representation and an octree representation.

Octrees

The number of leafs in an octree is much lower than the number of voxels in a grid. Consider the following two voxel scenes:

The left is a ball of resolution 64^3 and the right is a 64^3 sampled trigonometric function taken from here.

The following table shows the number of voxels in a grid versus the number of leafs in an octree for different resolutions. The number of voxels is independent of the scene. The number of leafs is shown for the two example scenes above.

| Resolution | Voxels | Leafs/Ball | Leafs/Function |

|---|---|---|---|

| 8 | 512 | 232 | 225 |

| 16 | 4,096 | 1,408 | 1,443 |

| 32 | 32,768 | 5,944 | 6,924 |

| 64 | 262,144 | 24,760 | 33,860 |

| 128 | 2,097,152 | 98,848 | 140,260 |

| 256 | 16,777,216 | 408,808 | 560,148 |

The number of voxels is way higher than the number of leafs, especially as the resolution grows. This is not only a waste of space but also of time. If you want to iterate through a grid you have to touch every single voxel. But with octrees you can run through its leafs and never look at individual voxels.

Octree definition

We will work with the following definition of an octree:

data Octree a =

Full a |

Children (Oct (Octree a))

data Oct a = Oct a a a a a a a aAn Octree a is either full and carries a value of type a or it has children. The children are held in an Oct. An Oct a is an 8-tuple of values of type a. For example this octree is subdivided once and has eight children filled with different letters:

exampleOctree :: Octree Char

exampleOctree = Children (Oct

(Full 'a') (Full 'b') (Full 'a') (Full 'a')

(Full 'c') (Full 'a') (Full 'a') (Full 'c'))We will have to enumerate all values in an octree. The enumerate function takes an octree and produces a list of all its leaf values.

enumerate :: Octree a -> [a]

enumerate (Full a) =

[a]

enumerate (Children children) =

concatMap enumerate (octToList children)

octToList :: Oct a -> [a]

octToList (Oct a0 a1 a2 a3 a4 a5 a6 a7) =

[a0, a1, a2, a3, a4, a5, a6, a7]If we have a full octree we produce the value in a singleton list. If the given octree has children we recursively enumerate all children and concatenate the results.

Paths

We will also need to know where an octree’s leafs are located in space. We can uniquely identify a node in an octree with its path from the root. We introduce a Path data type (sometimes called locational code):

data Path = Path Resolution Location

type Resolution = Int

type Location = V3 IntA path consists of four numbers: its resolution and its location’s three coordinates.

In the following image you can see quads with their corresponding quadtree paths:

In a quadtree a path consists of only three numbers: a resolution and two coordinates.

We will have to annotate each leaf in an octree with its path:

annotatePath :: Path -> Octree a -> Octree (Path, a)

annotatePath path (Full a) =

Full (path, a)

annotatePath path (Children children) =

Children (zipOctWith annotatePath (childPaths path) children)Again we have two cases. If the given octree is completely filled with a value a we return the given path paired with this value. If the given octree is subdivided we recursively annotate all its children. We zip the children with an Oct Path containing the immediate children’s paths.

Cubes

Every octree path denotes a 3D cube. A cube consists of a size and a position.

data Cube = Cube Float (V3 Float)We get the cube corresponding to a path with the pathCube function:

pathCube :: Path -> Cube

pathCube (Path resolution location) =

Cube size position where

size = recip (realToFrac resolution)

position = size *^ fmap realToFrac locationThe size of the cube is the reciprocal resolution (as a Float) and the position is the location scaled by the size. For example the path Path 4 (V3 1 0 0) corresponds to the cube Cube 0.25 (V3 0.25 0 0).

With these definitions we can start meshing.

Meshing

We are going to work with the following block type:

data Block = Air | SolidA block is either filled with air or it is solid.

A mesh is a list of faces. A face is a 3D quad that has a position and is spanned by two vectors.

data Face = Face (V3 Float) (V3 Float) (V3 Float)Our meshing functions will have type Octree Block -> [Face]. They will take an octree of blocks and produce a list of faces.

Stupid octree meshing

“Stupid meshing” is a technical term that means we generate six faces for every solid voxel. This results in lot’s of faces that can never be seen because they are hidden by other solid blocks. Here is a screenshot:

On the left you see a very simple voxel scene. On the right you see the same scene in wireframe mode.

Ok, now let’s implement stupid meshing for octrees:

stupidMesh :: Octree Block -> [Face]

stupidMesh octree =

concatMap leafFaces (enumerate (annotatePath rootPath octree))

leafFaces :: (Path, Block) -> [Face]

leafFaces (_, Air) =

[]

leafFaces (path, Solid) =

cubeFaces (pathCube path)The stupid meshing algorithm enumerates all leafs in the octree annotated with their paths. It applys the leafFaces function to each annotated leaf and concatenates the results.

The leafFaces function takes a pair of a path and a block and returns a list of faces. If the block type is Air we generate an empty list of faces. If the block type is Solid we generate the list of six faces of the cube corresponding to the path.

Later we’ll compare this meshing algorithm’s performace to others.

Naive octree meshing

“Naive meshing” means that we generate a face only when a solid block is adjacent to a transparent one. In other words: no inside faces. Here are two screenshots:

They are taken from inside of a completely solid sphere. Triangles are only generated where a solid block touches an air block.

The essence of the algorithm is the neighbour function. Given an octree and its direct neighbour in positive X direction it annotates every value in the given octree with its neighbour’s value.

neighbour :: Octree a -> Octree a -> Octree (a, a)

neighbour (Full value) (Full neighbourValue) =

Full (value, neighbourValue)

neighbour (Full value) (Children neighbourChildren) =

neighbour

(Children (homogeneousOct (Full value)))

(Children neighbourChildren)

neighbour (Children children) (Full neighbourValue) =

neighbour

(Children children)

(Children (homogeneousOct (Full neighbourValue)))

neighbour (Children children) (Children neighbourChildren) =

Children (zipOctWith neighbour children newNeighbours) where

(Oct _ c1 _ c3 _ c5 _ c7) = children

(Oct n0 _ n2 _ n4 _ n6 _) = neighbourChildren

newNeighbours = Oct c1 n0 c3 n2 c5 n4 c7 n6We have to consider four cases. In the base case both octrees are completely filled. We just pair up their values.

We reduce the cases where one given octree is full and the other one has children to the fourth case where both octrees have children.

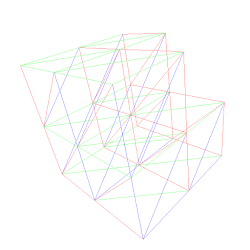

In the fourth case where both octrees have children we recurse. We have to be careful to pick the correct neighbours for the recursive calls. The following picture illustrates the idea with quadtrees:

The top two quadtrees depict the arguments and the bottom quadtree depicts the recursive calls. For example we recurse into the lower left quad with arguments c0 and c1.

Now that we know how to annotate an octree with neighbouring information, naive meshing for the positive X direction is:

naiveMeshPositiveX :: Octree Block -> [Face]

naiveMeshPositiveX octree =

mapMaybe neighbourFacePositiveX (

enumerate (annotate rootPath (

neighbour octree)))

neighbourFacePositiveX :: (Path, (Block, Block)) -> Maybe Face

neighbourFacePositiveX (path, (Solid, Air)) =

Just (cubeFacePositiveX (pathCube path))

neighbourFacePositiveX _ =

NothingThe naiveMeshPositiveX function enumerates all octree leafs annotated with their path and their neighbour’s value. It calls the neighbourFacePositiveX function on each annotated leaf and gathers up the results.

The neighbourFacePositiveX function takes a path paired with a pair of blocks. If the first block is solid and the second block is air we return a face for the cube that corresponds to the path. If not we return Nothing.

This only generates faces in the positive X direction. To generate faces for the other directions we have to mirror and transpose the octree before and after annotating each leaf’s neighbour and generate the cubes’ corresponding faces.

Benchmarks

The following table shows the number of faces for the ball scene generated by different algorithms for different resolutions:

| Resolution | Grid/stupid | Octree/stupid | Grid/naive | Octree/naive |

|---|---|---|---|---|

| 8 | 816 | 480 | 192 | 192 |

| 16 | 9,408 | 3,360 | 984 | 984 |

| 32 | 87,552 | 15,648 | 4,344 | 4,056 |

| 64 | 759,312 | 68,496 | 18,288 | 17,064 |

| 128 | 6,324,768 | 295,584 | 75,192 | 70,872 |

| 256 | 51,648,096 | 1,201,392 | 304,608 | 287,616 |

Naive meshing generates much fewer faces than stupid meshing.

The following table shows the time taken to produce the mesh for the ball with different resolutions. The time is in milliseconds.

| Resolution | Grid/stupid | Octree/stupid | Grid/naive | Octree/naive |

|---|---|---|---|---|

| 8 | 0.187 | 0.010 | 0.220 | 0.004 |

| 16 | 1.973 | 0.119 | 2.308 | 0.022 |

| 32 | 16.870 | 1.066 | 21.090 | 0.224 |

| 64 | 136.000 | 4.847 | 164.200 | 1.349 |

| 128 | 1154.000 | 20.930 | 1288.000 | 5.547 |

| 256 | 9186.000 | 85.760 | 10740.000 | 22.350 |

Meshing an octree is much faster than meshing a grid.

Thank you for reading the whole post. Comments are more than welcome!